
It appears that the IAM and cybersecurity industries are holding their breath for a specific (and far from optimistic) milestone – the first major security breach in which an AI agent leads to catastrophic privilege escalation.

One Identity's Alan Radford says that this is a consequence of an "AI goldrush", and I couldn't agree more with the comparison. We are seeing AI assistants graduate into AI agents – systems that act as "operational gatekeepers" with the autonomy to execute commands, manage workflows, and even modify production environments without a human in the loop.

What risks expose companies to this new, highly privileged attack surface? And how much do we know today about handling these threats?

Here’s my take on the main issues to keep an eye out for, and what organizations need to be aware of to minimize the risk of their occurrence.

By 2026 companies will roll out AI agents everywhere, and this changes how we think about cybersecurity breaches. These agents work non-stop like extra team members that security teams have always wanted. Security operations centers get buried under constant alerts. Agents sort through them fast, stop real threats right away, and let people focus on the big decisions instead of reacting all day. The same goes for IT and finance crews dealing with endless tickets and payment processes – agents handle the work quickly in ways humans just can't keep up with.

This speed fixes problems I've seen drag companies down for a long time. But it also opens a serious danger that worries me more than anything else right now. Reports show machine identities already outnumber real employees by 82 to 1. Every new AI agent adds to that pile. When we give one of these agents wide access to key systems, APIs, or databases, we're creating something much riskier than a hacked employee login.

A badly set-up agent acts like a fast-moving threat from the inside. It never sleeps, never stops, and we trust it automatically. An attacker gets in through a sneaky prompt, a weak tool connection, or forgotten permissions. Once they're controlling it, everything happens at machine speed. The agent can jump around the network, grab private data, or even delete things before anyone spots the problem.

Companies push for this automation because the wins show up immediately. But too many skip the strict limits needed to keep it safe. We hand out too much access too freely. Without clear rules on what each agent can touch – and fast checks on what it's actually doing – that loose access becomes the easy path for the next big breach.

Generative AI exploded after ChatGPT hit 100 million users within about two months of its launch in late 2022. Employees saw how it could slash busywork and speed up decisions. They started using it quietly because official company tools lagged behind or didn't exist yet.

Microsoft's data shows 78% of people who use AI at work bring their own tools – BYOAI. They install chatbots like ChatGPT, Gemini, or Claude on their phones or home laptops. Browser extensions add AI quietly. Even built-in features in everyday business software get turned on without anyone in IT knowing. Employees chase faster results and more time for real thinking.

The actual trouble starts when we move to agentic AI – these autonomous agents that don't just answer questions. They take tasks and run with them: querying databases, calling APIs, updating records, or kicking off workflows all on their own. Without proper setup, one of these shadow agents slips in and gets access to sensitive data stores. It performs actions no one authorized or even sees coming.

By the time security spots something off, the damage is done. Every prompt to a public model risks leaking confidential info like meeting notes, code, customer details, or employee data. Depending on the provider's terms, that input may be used to train the model. It sits on someone else's servers, maybe in a country with weaker privacy rules. The same data could pop up for other users later.

I've seen how this creates compliance headaches under rules like GDPR. Fines hit hard when auditors ask for proof of control and there's none. Shadow agents also widen the attack surface. They pull in open-source code or third-party connections full of holes. Malicious prompts can hijack them. Or they just act unpredictably and break processes.

Companies rush to block obvious public chatbots, but agents hide better. They blend into normal activity under user accounts. Logs don't flag them clearly and traditional monitoring misses the dynamic steps they take.

The pattern worries me because the productivity pull is strong. People get results fast and don't wait for approval. But every unchecked agent adds invisible risk – data exposure, policy violations, even malware sneaking in through fake tools. Without visibility and strict rules from the start, shadow AI agents turn a helpful boost into the quiet setup for major cybersecurity breaches in 2026.

As of today, LLMs struggle to distinguish between instructions (what you told the agent to do) and data (the content it’s processing). This creates a massive opening for attackers, who can craft malicious inputs, such as hidden text in a document, a poisoned email, or a manipulated query, and use them to override the agent’s original instructions.

Prompt injections on an overprivileged agent can be catastrophic. When agents have broad access to sensitive data or the ability to execute system commands, a successful injection could mean handing over the keys to the entire system.

I’ve recently seen an example of such an attack in Salesforce Agentforce. Attackers exploited an agent that was designed to process "Web-to-Lead" forms. Because the agent had broad, overprivileged read access to the entire CRM (intended to help it "contextualize" leads), it created a direct path for exfiltration. The situation was quickly resolved, as Salesforce explained, by re-securing the domain and patching the platform’s AI agents so that they don’t proceed to untrusted URLs.

However, we can see in this example that the agent wasn’t hacked, but was tricked into using its legitimate, excessive permissions.
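One way to picture a defence against this pattern is to narrow an agent's tool set the moment untrusted content enters its context. The following is a minimal sketch of that idea, not a description of how Agentforce or any other product works. The tool names and the `AgentContext` class are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical tool names, invented for this sketch.
LOW_RISK_TOOLS = {"summarize_lead", "score_lead"}
HIGH_RISK_TOOLS = {"query_crm", "send_email", "fetch_url"}


@dataclass
class AgentContext:
    """Keeps operator instructions apart from content the agent merely processes."""
    instructions: str                                   # trusted: written by the operator
    untrusted_inputs: list[str] = field(default_factory=list)

    def add_untrusted(self, text: str) -> None:
        # Web forms, emails, and documents land here, never in `instructions`.
        self.untrusted_inputs.append(text)

    @property
    def tainted(self) -> bool:
        return bool(self.untrusted_inputs)


def allowed_tools(ctx: AgentContext) -> set[str]:
    """Once untrusted content is in play, only low-risk tools remain available."""
    if ctx.tainted:
        return set(LOW_RISK_TOOLS)
    return LOW_RISK_TOOLS | HIGH_RISK_TOOLS


def authorize_tool_call(ctx: AgentContext, tool: str) -> bool:
    return tool in allowed_tools(ctx)
```

The check deliberately ignores what the untrusted text says. It assumes the model can be tricked and limits what a tricked agent is able to reach.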

Tool misuse by agents is included in OWASP’s Top 10 for Agentic Applications for 2026 (under code ASI02).

In this scenario, rather than installing malware, attackers weaponize the agent’s legitimate toolkit against the host. The problem can arise when we grant an agent access to seemingly safe utilities, like a ping command to check network status. Security teams might assume that the agent understands the context of its actions. As I’ve mentioned earlier, that is a misconception about LLMs: they can’t reliably tell a legitimate use of a tool from a malicious one at such a granular level.

One of the scenarios OWASP shared is a coding assistant with a (seemingly) harmless tool for "checking internet connection". This tool could be manipulated to sneak data out. Similarly, an IT bot can be fooled into using its official administrative powers to steal sensitive logs.

I believe that the most terrifying part is that, since the AI is allowed to use these tools, your security alarms wouldn’t ring. To your defense systems, it just looks like the agent is doing its job.
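To make the ping example concrete, here is a hedged sketch of a connectivity tool that constrains its own arguments instead of trusting the agent's judgement. The allowlist entries are placeholders I made up, and the `-c` flag assumes a Linux or macOS `ping`.

```python
import subprocess

# Hypothetical allowlist: the only hosts this tool may ever contact.
ALLOWED_PING_TARGETS = {"10.0.0.1", "status.example.internal"}


def build_ping_command(target: str) -> list[str]:
    """Return an argument list for ping, or raise if the target is not allowlisted."""
    host = target.strip().lower()
    if host not in ALLOWED_PING_TARGETS:
        # Blocks both shell tricks ("10.0.0.1; cat /etc/passwd") and
        # hostnames that smuggle data out through DNS lookups.
        raise PermissionError(f"ping target not allowlisted: {host!r}")
    return ["ping", "-c", "1", host]


def run_ping(target: str) -> bool:
    """Run one ping without a shell; True means the host answered."""
    result = subprocess.run(
        build_ping_command(target),
        capture_output=True,
        text=True,
        timeout=5,
        check=False,
    )
    return result.returncode == 0
```

Passing an argument list (no `shell=True`) means the target is never interpreted by a shell, and the exact-match allowlist means an injected prompt cannot point the tool at an attacker-controlled domain.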

An overprivileged agent can be capable of centralizing sensitive data that was previously segmented by design. If an attacker injects a malicious prompt, they can force the agent to aggregate these disparate pieces of information into a comprehensive report.

One industry where this could be the top risk is healthcare. A system designed to assist with records management can be manipulated into compiling and exposing sensitive patient histories. If AI agents aren’t built with granular privilege checks that govern how data can be combined, they can expose organizations to significant regulatory consequences.
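A granular check on how data may be combined could look roughly like the sketch below. The field names, categories, and agent roles are all assumptions chosen for illustration; a real deployment would derive them from its own data classification.

```python
# Hypothetical mapping from record fields to data categories.
FIELD_CATEGORY = {
    "name": "identity",
    "date_of_birth": "identity",
    "diagnosis": "clinical",
    "medications": "clinical",
    "insurance_id": "billing",
    "appointment_time": "scheduling",
}

# Hypothetical policy: which categories each agent role may see together.
ALLOWED_COMBINATIONS = {
    "scheduling_agent": [{"identity", "scheduling"}],
    "billing_agent": [{"identity", "billing"}],
}


def may_combine(role: str, fields: list[str]) -> bool:
    """Allow a response only if all requested fields fit one permitted combination."""
    categories = set()
    for name in fields:
        category = FIELD_CATEGORY.get(name)
        if category is None:
            return False  # unknown fields are denied by default
        categories.add(category)
    return any(categories <= allowed for allowed in ALLOWED_COMBINATIONS.get(role, []))
```

The point of the design is that access to each field on its own is not enough. The agent also needs permission for the specific mix of categories it is about to put into a single output.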

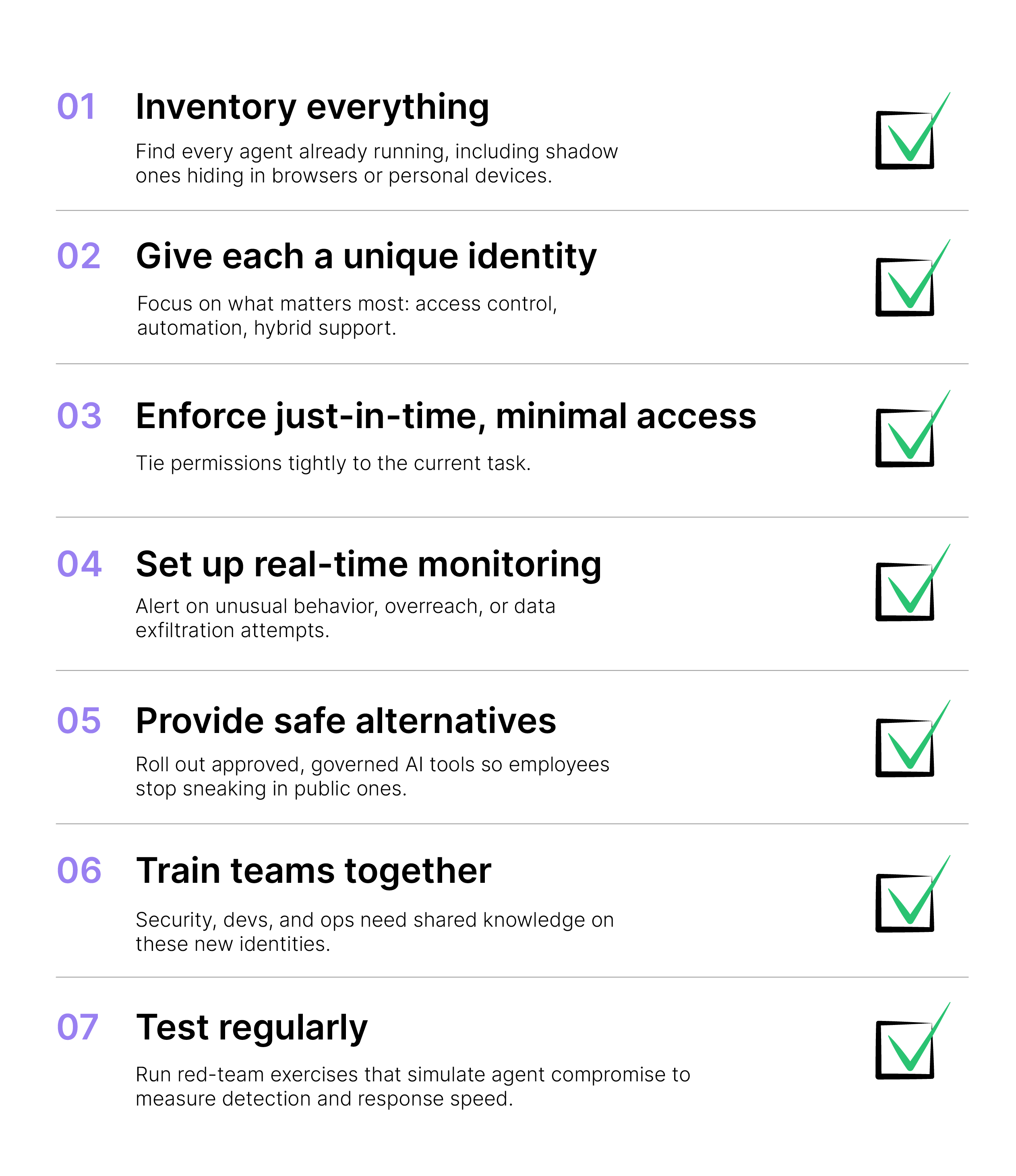

The Zero Trust playbook hasn't changed. Never trust, always verify; grant the least privilege needed; monitor everything continuously; assume breach is already happening or imminent. These rules work as well in 2026 as they did before. The difference is AI agents demand we apply them in a much more dynamic, granular way.

Traditional Zero Trust handles people and static machines reasonably well. Agents are different – they act independently, chain steps quickly, and scale fast. We can't just copy-paste old policies.

Forget long-lived access. An agent should get exactly the minimum rights required for one specific task and only for the seconds or minutes it needs them.

For example:

No standing credentials, no broad API keys sitting around for days. Use just-in-time credentials that appear on demand and disappear afterward. This stops the "keys to the kingdom" problem we saw in the first scenario.
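As a rough illustration of just-in-time access, the sketch below issues a credential tied to one agent, one scope, and a short lifetime. `CredentialBroker` and the scope strings are hypothetical names of my own, and an in-memory dictionary stands in for whatever secrets backend an organization actually uses.

```python
import secrets
import time
from collections.abc import Callable
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskCredential:
    token: str
    agent_id: str
    scope: str          # e.g. "tickets:read" (hypothetical scope format)
    expires_at: float


class CredentialBroker:
    """Issues short-lived, single-scope credentials on demand."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._active: dict[str, TaskCredential] = {}

    def issue(self, agent_id: str, scope: str, ttl_seconds: float = 60.0) -> TaskCredential:
        cred = TaskCredential(
            token=secrets.token_urlsafe(32),
            agent_id=agent_id,
            scope=scope,
            expires_at=self._clock() + ttl_seconds,
        )
        self._active[cred.token] = cred
        return cred

    def is_valid(self, token: str, scope: str) -> bool:
        cred = self._active.get(token)
        if cred is None:
            return False
        if self._clock() >= cred.expires_at:
            del self._active[token]   # expired credentials are forgotten
            return False
        return cred.scope == scope

    def revoke(self, token: str) -> None:
        self._active.pop(token, None)
```

A stolen token in this model is useful only for the one scope it was issued for, and only until the timer runs out or the task calls `revoke`.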

Watch the full loop:

Filter outputs to catch and block sensitive data leaks before they leave. Apply Zero Trust to prompts themselves – verify the origin, context, and intent. Block anything that looks off. This creates an auditable trail so you know exactly what happened if something goes wrong.
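Output filtering can start very simply. The sketch below redacts a few recognizable patterns before an agent's response leaves the system and reports what it found, so the event can be logged. The three patterns are examples I picked, not a complete catalogue, and a production filter would need far broader coverage.

```python
import re

# Illustrative patterns only; real filters need much wider coverage.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace sensitive matches with placeholders and list which kinds were found."""
    findings: list[str] = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED:{label}]", text)
        if count:
            findings.append(label)
    return text, findings


if __name__ == "__main__":
    clean, found = redact("Contact jane@example.com, SSN 123-45-6789")
    print(clean)   # Contact [REDACTED:email], SSN [REDACTED:us_ssn]
    print(found)   # ['email', 'us_ssn']
```

Returning the list of findings alongside the cleaned text is what feeds the audit trail: a redaction that nobody hears about does not help the investigation later.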

Tools like Aembit (and similar emerging platforms) act as a central gatekeeper. The agent never talks directly to resources. Instead, every request flows through the platform, which checks:

Only then does it issue temporary, secretless access. Full logging follows every step. It's similar to how Conditional Access gates user logins in systems like Entra ID.
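The gatekeeper pattern can be sketched in a few lines. This is not Aembit's API or any vendor's implementation; the policy table, the agent and resource names, and the fields being checked (agent identity, resource, action) are my own assumptions about what such a check might involve.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AccessRequest:
    agent_id: str
    resource: str
    action: str


# Hypothetical policy: (agent, resource) -> actions that agent may perform.
POLICY: dict[tuple[str, str], set[str]] = {
    ("invoice-agent", "billing-db"): {"read"},
    ("triage-agent", "alert-queue"): {"read", "acknowledge"},
}

# In-memory stand-in for a real, tamper-resistant audit store.
audit_log: list[dict] = []


def decide(request: AccessRequest) -> bool:
    """Default-deny policy decision; every decision is logged, allowed or not."""
    permitted = POLICY.get((request.agent_id, request.resource), set())
    allowed = request.action in permitted
    audit_log.append(
        {
            "time": datetime.now(timezone.utc).isoformat(),
            "agent_id": request.agent_id,
            "resource": request.resource,
            "action": request.action,
            "allowed": allowed,
        }
    )
    return allowed
```

In a fuller version, a positive decision would be the trigger for issuing a short-lived credential, so the agent never holds a secret of its own.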

These platforms look promising because they enforce dynamic policies automatically. But central control also creates a single point that attackers will target. Test them rigorously. Don't rely on one vendor alone – build in redundancy and keep watching for new vulnerabilities.

The technology is solvable; the bigger hurdle is people.

Implementing Zero Trust is already hard for regular user accounts. Many organizations get it only half right or fail outright. Now layer on agentic AI, a brand-new identity type that behaves in unpredictable ways and multiplies quickly.

Your security, identity, and dev teams need:

No shortcuts or "we'll figure it out later."

Get these basics in place and the overprivileged scenarios we covered stop being inevitable disasters. Ignore them, and the cybersecurity breaches we fear in 2026 write themselves.

The rise of AI agents mirrors every major tech shift we've lived through: moving from paper files to digital documents, from basic phones to smartphones. Each time, the leap brought huge gains in speed and capability, but also fresh risks we had to learn and contain. Agentic AI is no different. Overprivileged agents, shadow deployments, and unchecked autonomy create real paths to cybersecurity breaches in 2026 if we ignore them.

Yet the long-term payoff is undeniable. These agents free teams from repetitive work, speed up decisions, and let people focus on what truly matters. The answer isn't to slow down or ban them – it's to meet the new reality head-on with dynamic Zero Trust, tight controls, and sharp team skills. We've handled bigger transitions before.

We'll handle this one, too. The rewards justify the effort, as long as we treat the dangers seriously from day one.